Researchers said in a report on Wednesday that image creation tools powered by artificial intelligence from companies including OpenAI and Microsoft can produce photos that could promote election or voting-related disinformation, despite each company having policies against creating misleading content.

The Centre for Countering Digital Hate (CCDH), a nonprofit that monitors online hate speech, used generative AI tools to create images of US President Joe Biden lying in a hospital bed and election workers smashing voting machines, raising worries about falsehoods ahead of the US presidential election in November.

“The potential for such AI-generated images to serve as ‘photo evidence’ could exacerbate the spread of false claims, posing a significant challenge to preserving the integrity of elections,” CCDH researchers said in the report.

CCDH tested OpenAI’s ChatGPT Plus, Microsoft’s Image Creator, Midjourney, and Stability AI’s DreamStudio, which can each generate images from text prompts.

The report follows an announcement last month that OpenAI, Microsoft, and Stability AI were among 20 tech companies that signed an agreement to work together to prevent deceptive AI content from interfering with elections taking place globally this year. Midjourney was outside the initial group of signatories.

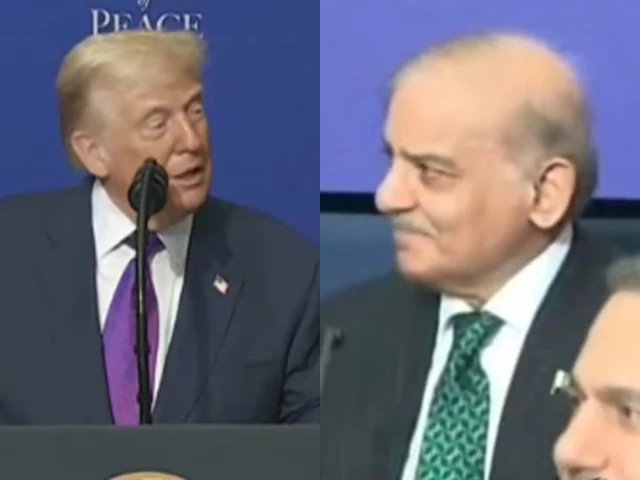

CCDH said the AI tools generated images in 41 percent of the researchers’ tests and were most susceptible to prompts that asked for photos depicting election fraud, such as voting ballots in the trash, rather than images of Biden or former US President Donald Trump.

The report said ChatGPT Plus and Image Creator successfully blocked all prompts that asked for images of candidates.

However, Midjourney performed the worst of all the tools, generating misleading images in 65 percent of the researchers’ tests, the report said.